Apple’s 3D scanner will change everything

This story was first published at The Aleph Report. If you want to read the latest reports, please subscribe to our newsletter and follow us on Twitter.

In September, Apple announced the iPhone X. A new feature, called Face ID, will allow users to unlock the phone through facial recognition. This single feature will have profound implications across other industries.

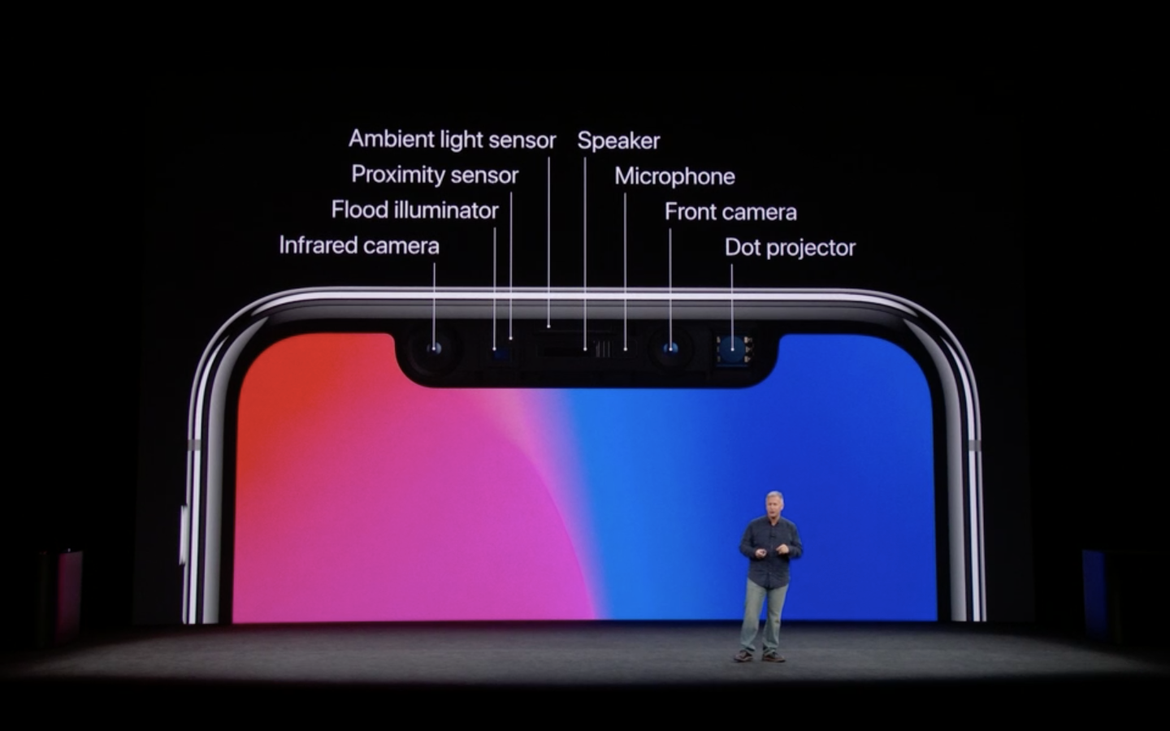

Apple dubbed the camera TrueDepth. This sensor allows the phone not only to capture a 2D image but also to gather depth information and form a 3D map.
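To make the idea of turning depth readings into a 3D map concrete, here is a minimal sketch using the standard pinhole camera model. It is not Apple’s method or any TrueDepth API. The function name `depth_to_points` and the intrinsics `fx`, `fy`, `cx`, `cy` (focal lengths and principal point, in pixels) are assumptions made for illustration, and the sample values are made up.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into a list of (x, y, z) points.

    depth is a list of rows; each value is the distance along the
    camera axis in meters. Values that are None or <= 0 are treated
    as missing readings and skipped (an assumption of this sketch).
    """
    points = []
    for v, row in enumerate(depth):          # v: pixel row
        for u, z in enumerate(row):          # u: pixel column
            if z is None or z <= 0:
                continue
            # Pinhole model: a pixel offset from the principal point,
            # scaled by depth over focal length, gives metric x and y.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth map with one missing reading.
sample = [[1.0, 0.0],
          [2.0, 2.0]]
cloud = depth_to_points(sample, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(len(cloud), "points recovered")
```

Each valid pixel becomes one point in space, which is why a single depth frame is already a rough 3D scan of whatever the camera sees.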

In a nutshell, it’s a 3D scanner right in your palm. It’s a Kinect embedded in your phone. And I bring up Kinect because it’s the same technology. In November of 2013, Apple acquired PrimeSense, the Israeli 3D sensing company behind Kinect’s technology, for 360 million dollars.

PrimeSense’s technology uses what’s called structured light 3D scanning. It’s one of the three main techniques employed to do 3D scanning and depth sensing.

Structured light 3D scanning is the perfect method to embed in a phone. It doesn’t yield a massive sensing range (between 40 centimeters and 3.5 meters), but it provides the highest depth accuracy.

Depth accuracy is critical for Apple. The sensor is the cornerstone of Face ID, their facial authentication system. If you’re going to use faces to unlock your life, you had better be sure the accuracy is high enough to prevent face fraud.

Is this new?

This technology, though, has been around for a while. PrimeSense pioneered the first commercial depth-sensing camera with Kinect in November of 2010.

Two years later, in early 2012, Intel started developing their depth-sensing technology. First called Perceptual Computing, it was later renamed Intel RealSense.

In September of 2013, Occipital launched its Structure Sensor campaign on Kickstarter. It raised 1.3 million dollars, making it one of the top campaigns of the day.

But despite the field heating up, the uses of the technology remained either desktop-bound or gadget-bound.

In 2010, PrimeSense was already trying to miniaturize their sensor so it could run on a smartphone. It would take them seven years (and Apple’s resources) to finally be able to deliver on that promise in the form of the iPhone X.

The feat is quite spectacular. Apple managed to fit the Kinect into a smartphone while keeping the energy-hungry sensors in check, beating everyone, including Intel and Qualcomm, to market.

What will this entail?

Several technological and behavioral changes are converging in the field. On one side, we see a massive improvement in computer vision systems. Deep Learning algorithms are pushing the performance of such systems to parity with human experts. In turn, such systems are now available as cloud-based commodities.

At the same time, games like Pokémon Go are building the core Augmented Reality (AR) behavior in users. Now, more than ever, people are comfortable using their phones to merge reality with AR.

On top of that, the current departure from text-based interfaces is beginning to change the behavior of users. Voice-only is turning into a reality, and it’s a matter of time until video-only becomes the norm too.

Having a depth sensor in a phone changes everything. What once required specialized hardware is now accessible everywhere. What once tied us to a specific location is now mobile.

The convergence of user behavior, increased reliance on computer vision systems, and mobile 3D sensing technology is a killer combination.

The exciting thing is that Apple will turn depth-sensing technology into a commodity. Apple’s TrueDepth, though, will limit what many people can build. These people will, in turn, look to more powerful sensors like Lidar or Qualcomm’s new depth sensors, catalyzing the whole ecosystem.

In other words, Apple is putting depth sensors on the table for everyone to admire. They’re doing that not with the technology itself but with a killer application of it, Face ID. They’re showing the way to more powerful apps.

But can we build on top of this?

It’s hard to predict what this combo will be able to produce. Here are some ideas, but I’m sure we’ll see some surprising apps soon enough.

Photo editing and avatar galore

I’ll start with the obvious. Your Instagram feed and stories will become better than ever. Photos taken with the iPhone X will be able to render different depth focuses. TrueDepth will also enable users to create hyper-realistic masks and image gestures.

Gesture-based controls

While these interfaces have been around for a long time, Apple just moved them to the world’s platform: mobile. It’s a matter of time before we see these apps cropping up on our smart TVs or other surfaces.

Security and biometrics

Apple already demonstrated face-based authentication. I expect it to be adopted massively, from banks and airport controls to office or home access systems.

This technology could also aid in know-your-customer (KYC) systems, fraud prevention or speedier identity checks. An attractive field of application could also be forensics. The last one is something I thought about after the Boston bombings of 2013.

Could you stitch together all the user-generated video of that day? Could you create a reliable 3D space investigators could use to analyze what happened? Depth-sensing cameras everywhere will make this a reality.

Navigational enhancements

Depth sensing is also critical in several navigational domains, from Augmented Reality (AR) and Virtual Reality (VR) all the way to the mapping needs of autonomous vehicles. Having a portable device that can enhance 3D maps could be a huge benefit for many self-driving car companies.

On top of that, layer the rise of drone-based logistics and its complicated navigational issues. Right now many systems use Lidar sensors, but it’s easy to imagine them using depth sensors too.

For example, they could be used to identify where the recipient is located and verify their identity.

People tracking for ads

People-tracking technologies are being used by authorities for “security” purposes. With a small twist, we could also use depth-sensing technology to do customer tracking and efficient ad delivery in the physical world. Adtech is going to have a field day with this tech.

3D scanning

This is an obvious one. I would only add that Apple made 3D scanning not only portable but ubiquitous. It’s a matter of time before we see it used in many different environments. Some uses might include active maintenance, Industrial IoT or the Real Estate industry.

Predictive health

One of the spaces this technology will revolutionize is eHealth and predictive medicine. Users will now be able to scan physical maladies and send the scans to their doctors.

In the same vein, it will also affect the fitness space, allowing for weight and muscle mass tracking.

Product detection

Depth-sensing will have immense repercussions for computer vision systems. It allows them to add a third dimension (depth) and speed up object recognition.

This will bring real image tracking and detection to our phones. We should expect better real-time product detection (and buying), or improvements in fashion-related products.

Mobile journalism

Last but not least, I’m intrigued by how journalists will use such technology. In the same way forensic teams might employ these systems, journalism can also benefit from them.

Mobile journalism (MoJo) is already an emerging trend but could be significantly enhanced by the use of 3D videos and depth recreations.

What should you be doing?

We are on the verge of seeing an explosion of apps using this technology. Apple already has ARKit on the market. The Android ecosystem is moving to adopt ARCore and finally deliver on Project Tango.

Any organization out there should devote some time to thinking about how this technology can bring a new product to life. From Real Estate to Construction to Media, depth sensors are going to change how we interface with physical information.

Even if you don’t work with physical information, it’s worth thinking about how this technology enables us to bridge both realities.

This space is going to move fast. Now that Apple has opened the floodgates, the whole Android ecosystem will follow. I suspect that in less than two to three years we’ll see a robust set of apps in this space.

“According to the new note seen by MacRumors, inquiries by Android vendors into 3D-sensing tech have at least tripled since Apple unveiled its TrueDepth.”

Following the app ecosystem, we’ll see a crop of devices embedding depth sensor technology beyond the phone, and it will eventually be all around us.
